The AI cheating panic is missing the point

OpenAI rolled out ChatGPT’s study mode partly to prevent cheating.

Rachel Janfaza

Since ChatGPT hit the scene nearly three years ago, adults have been gawking at stories about Gen Z using artificial intelligence to cut corners in the classroom. “Everyone Is Cheating Their Way Through College,” New York Magazine proclaimed. The New Yorker followed up with a piece from Hua Hsu that opened with an undergraduate shamelessly bragging about his AI-generated essays.

Let’s get the obvious out of the way: Gen Z knows they shouldn’t use ChatGPT to flat-out cheat, even if some of them do it anyway. ChatGPT knows this, too - and OpenAI rolled out a study mode partly to address concerns about its misuse. But the obsession with this topic is distracting from a more pressing question: What should students be using AI to do?

Members of my generation are well aware that AI is poised to remake the job market. We are constantly told that using it the wrong way will compromise our education and personal integrity - but also that if we don’t master it we’ll watch our careers become automated into extinction.

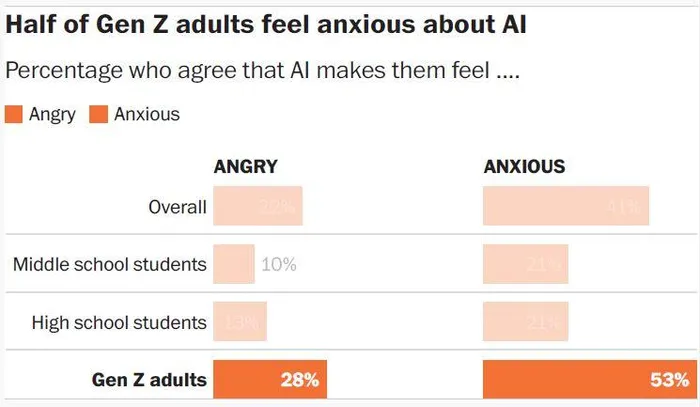

Graphic showing concern and anger over AI

Image: Supplied

In listening sessions, one-on-one conversations and surveys with young adults, Zoomers describe a complex relationship with AI: They use it daily, but they’re uneasy about its rise. Far from being enthusiastic early adopters, more than half of Gen Z adults said in a recent Gallup survey that AI makes them feel anxious. (The poll was conducted in collaboration with the Walton Family Foundation, which also supports my own research.)

Part of this anxiety is driven by the stigma around AI in schools, where the panic around cheating has left students unsure of when and how to incorporate it into their work, both in class and after they graduate.

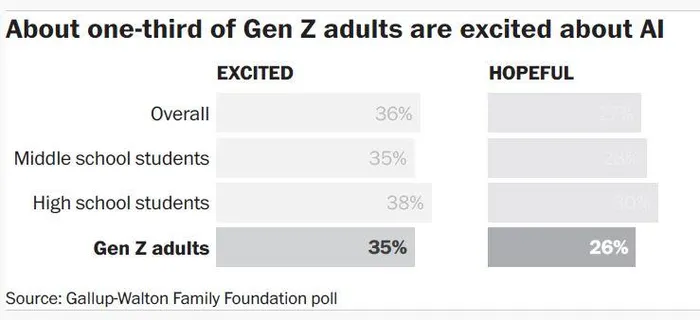

Graphic showing confidence in AI

Image: Supplied

Right now, the AI educational landscape looks like the Wild West. Last spring, 53 percent of K-12 students told Gallup that their school does not have a clear AI policy in place. The number is even higher outside metro areas, with 67 percent of students reporting a lack of clear guidelines - which risks further exacerbating digital divides between urban and rural communities.

Even just minutes from Silicon Valley, high-schoolers express frustration with murky school policies around AI. “You’d think in the Bay Area there would be a schoolwide policy,” Jaeden Pietrofeso, a recent graduate of Mountain View High School, told me last spring. “But no.”

Pietrofeso and some of his classmates have advocated for their school to make the rules of the road clearer so that students know when they’re crossing a line. But school officials are running into their own bumps along the way: Kip Glazer, Mountain View High School’s principal, told me educators are having a tough time reaching consensus amid the constant rollout and adoption of new AI features. Given the rapid speed of change, she said, many worry that “by the time they get the policy out, it will be outdated.”

Easily referenced standards would help, so that students know whether it’s okay to use AI to outline their class notes, go over a math homework question or suggest an essay topic. But that’s only part of what students tell me they need. What they often want most are classes that don’t just acknowledge AI but also show them how to use it properly. Ohio State is now requiring students to take an AI fluency course, for example. That’s a step in the right direction.

Some students I’ve talked to say their instructors ask them to use tools such as ChatGPT for research, especially in higher ed. But there are also art teachers who have asked them to use AI to brainstorm ideas for pieces and English teachers who ask them to generate writing prompts. “I’ve had multiple assignments that actually use AI,” one recent graduate from the University of Arkansas told me.

Schools that open channels to discuss AI with their students might also find the generational divide is smaller than they assume. When I spoke with college graduates in the Class of 2025, many of them shared the same concerns as older generations. While most used AI for their college coursework, they also expressed fears about whether chatbots were making it harder to think for themselves or absorb what they were studying. As one American University graduate put it to me, “AI made college easier and harder at the same time.”

Students would love to open up more about these trade-offs, but the taboo around AI in schools is making it harder for them to seek reassurance from grown-ups about whether they’re getting the balance right.

“I feel like there’s a lot of eyes on us,” one 17-year-old high school junior told me. “When anything new is introduced to the world, just like we see in history, youth make the pathway for the future. I just hope what we’re doing right now is not destructive.”

Whatever happens next, students know AI is here to stay, even if that scares them. They’re not asking for a one-size-fits-all approach, and they’re not all conspiring to figure out the bare minimum of work they can get away with. What they need is for adults to act like adults - and not leave it to the first wave of AI-native students to work out a technological revolution all by themselves.

- Rachel Janfaza is the founder of Up and Up Strategies, a research and consulting firm focused on reaching Gen Z.